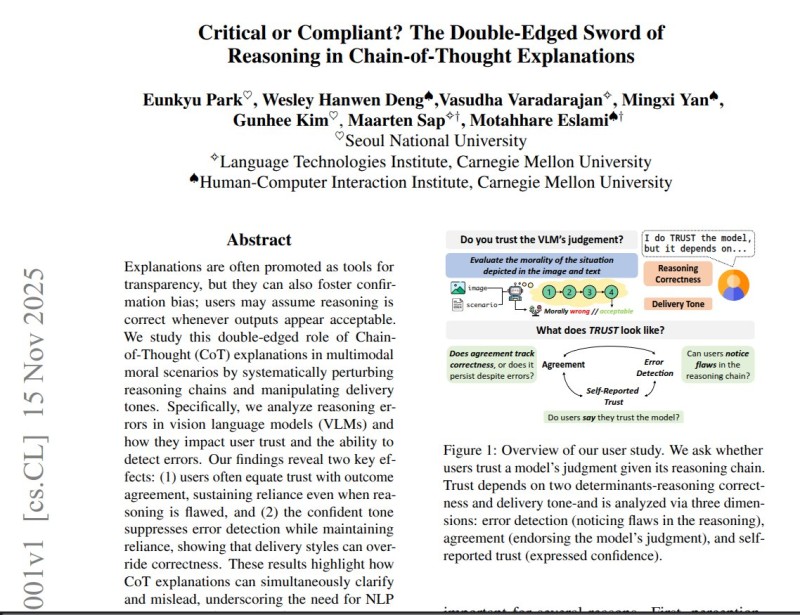

⬤ A recent study called "Critical or Compliant? The Double-Edged Sword of Reasoning in Chain-of-Thought Explanations" looks at how vision-language models show their thinking and how that impacts human trust. People in the study looked at an image, read a scenario, saw the model's moral call, and reviewed chain-of-thought explanations that researchers deliberately tweaked.

⬤ The team built multiple reasoning chains and broke them three different ways: skipping crucial steps, throwing in contradictions, or making up details. They also rewrote each explanation using confident, neutral, or careful tones to see how word choice changed what users thought. Even when explanations had glaring mistakes, lots of people said they trusted the model if they happened to agree with where it landed.

⬤ Missing steps turned out to be the trickiest error type for users to catch. Contradictions and fake details were easier to spot but still got past people regularly, especially when the explanation came across as sure of itself. Real AI models often mix bad logic with confident delivery, which creates a sneaky but effective way to mislead users.

⬤ The research points to a growing problem: explanations designed to make AI transparent can actually hide reasoning mistakes depending on how they're written and delivered. As these models get woven into more decision-making processes, figuring out how explanation style shapes trust matters for checking model safety, matching outputs with what people expect, and building protections around automated thinking.

Peter Smith

Peter Smith

Peter Smith

Peter Smith