Controlling how large language models behave has always been tricky—push too hard, and the outputs become nonsensical. A new research paper offers a fresh solution by teaching AI systems to recognize what "normal" looks like from the inside out, opening doors to safer and more reliable model steering.

Training on 1 Billion Internal States to Map AI Behavior

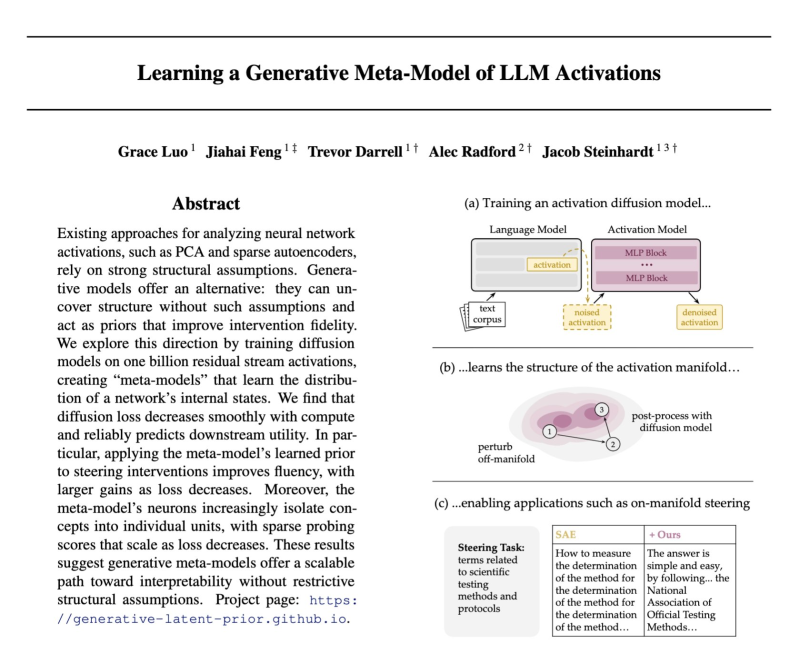

A recent study titled "Learning a Generative Meta-Model of LLM Activations" presents an innovative approach to understanding how large language models work internally. Scientists trained a diffusion model on over one billion internal activation states from language models. The goal was simple but ambitious: learn what typical hidden representations look like when an AI processes information.

By building this massive dataset of internal patterns, the researchers created what they call a "prior" over activation states. Think of it as a reference map showing where a healthy, functioning model should be operating at any given moment.

How Denoising Activations Keeps AI Outputs Stable

The real innovation comes in how this system handles model steering. When researchers deliberately push a language model's behavior in a specific direction—say, to make it more cautious or creative—the internal states often drift away from normal patterns. The result? Garbled or incoherent responses.

When a model is steered and its internal states deviate from typical behavior, the system can denoise those activations and project them back onto a natural manifold.

In simpler terms, the diffusion model acts like a safety net. It detects when internal activations have been pushed too far and gently guides them back toward normal ranges, all while preserving the intended behavioral changes. This allows for controlled AI steering without sacrificing response quality.

Discovering Meta-Neurons: A Window into AI's Internal Organization

Beyond stability improvements, the technique reveals fascinating structural details inside language models. The learned prior identifies what researchers describe as "meta-neurons"—concept-like structures that show how models internally organize information.

This discovery offers a clearer lens for interpreting AI decision-making compared to traditional analysis methods, which often struggle to explain why models produce specific outputs.

A Path Toward More Reliable AI Systems

The research demonstrates a practical mechanism for manipulating language model behavior without breaking coherence. For developers building AI applications, this could mean more reliable AI outputs in production environments, where maintaining both control and quality is essential.

As AI systems become more integrated into critical workflows, techniques that balance steering with stability will be crucial for creating trustworthy, predictable models that users can depend on.

Usman Salis

Usman Salis

Usman Salis

Usman Salis