⬤ Liquid AI's new LFM2.5 series brings serious upgrades to small-scale AI. These on-device models are built for speed, reliability, and handling multiple data types without needing a cloud connection. The company trained them on 28 trillion tokens (up from 10 trillion) and added extra reinforcement learning to make them better at following instructions. The goal? Smarter AI agents that run right on your phone, laptop, or wearable.

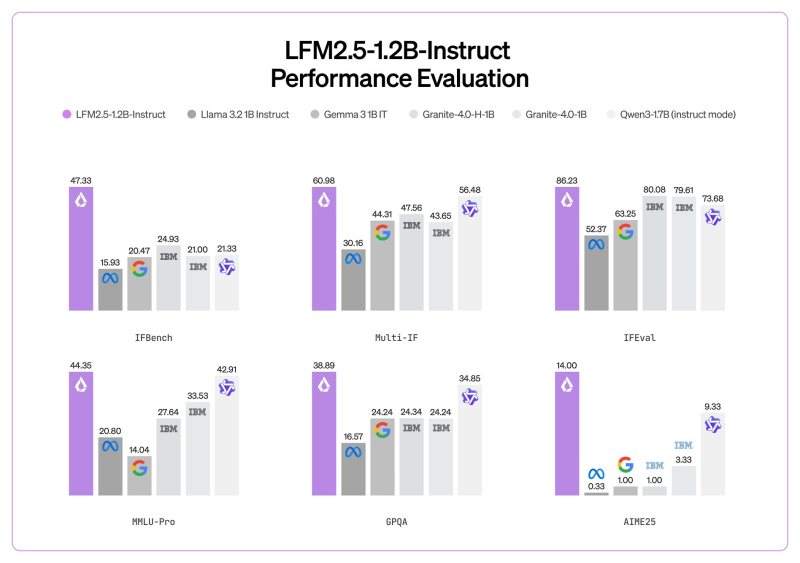

⬤ The numbers look promising. LFM2.5-1.2B-Instruct beat competitors like Llama 3.2 1B, Gemma 3 1B, Granite-4.0-1B, and Qwen3-1.7B across the board. It scored 47.33 on IFBench, 60.98 on Multi-IF, 86.23 on IFEval, 44.35 on MMLU-Pro, 38.89 on GPQA, and 14.00 on AIME25, consistently topping the charts for models in its size class.

⬤ What makes LFM2.5 different is its focus on running locally. That means faster responses, better privacy, and no constant need for internet connectivity. Liquid AI built on its earlier LFM2 models, adding support for more data types and improving real-world consistency through more advanced tuning.

⬤ This fits a bigger shift in AI development. More companies are moving models onto devices instead of keeping everything in the cloud. LFM2.5 shows you can get strong performance without the bulk, which opens doors for smarter features on phones, smartwatches, and other everyday gadgets without compromising your data or waiting for server responses.

Saad Ullah