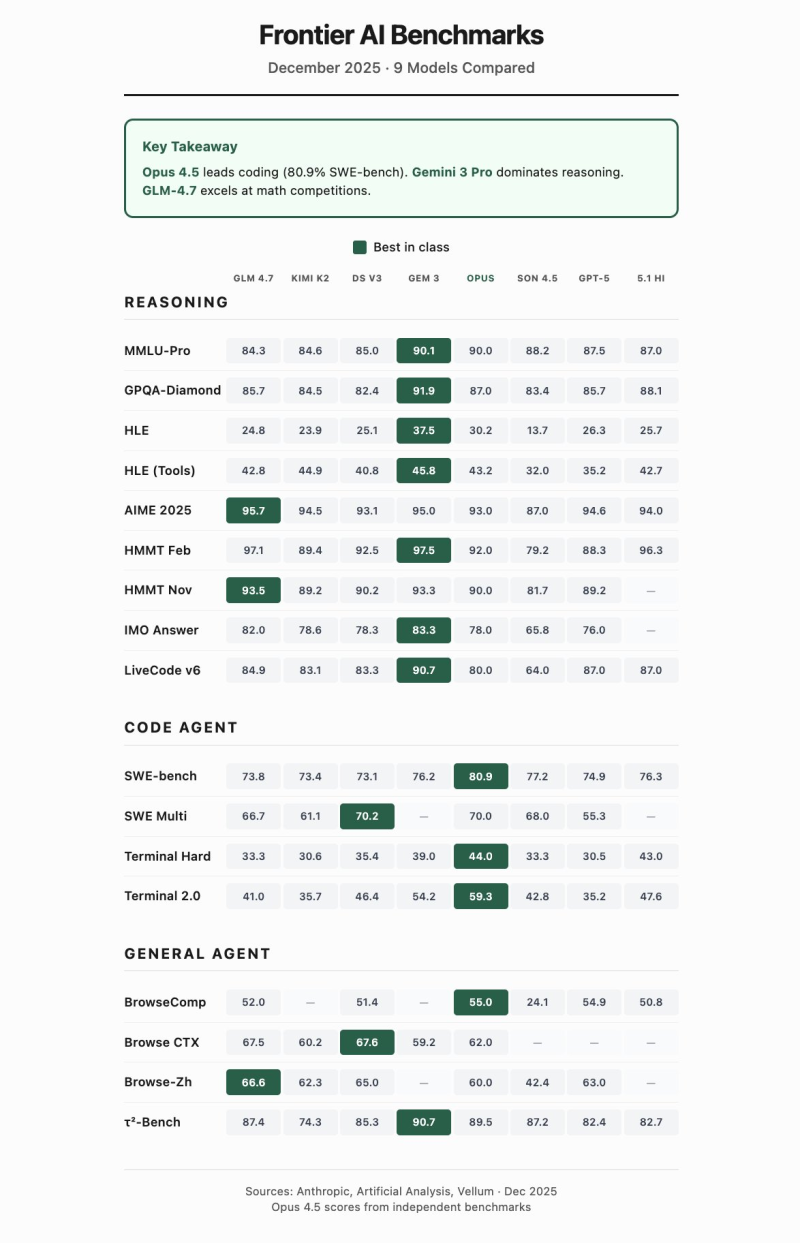

⬤ GLM 4.7 has topped the charts as 2025's leading open-source AI model, backed by fresh Frontier AI Benchmark results comparing nine major players across reasoning, coding, and general agent tasks. The model hit 73.8% on SWE-bench—a milestone closed-source systems reached just six months ago—signaling open-source AI is catching up faster than many expected.

⬤ GLM 4.7 dominated math and reasoning evaluations, posting 95.7% on AIME 2025, 97.1% on HMMT February, and 93.5% on HMMT November—scores that beat most competitors in these categories. While Opus 4.5 led coding benchmarks and Gemini 3 Pro excelled in select reasoning tasks, GLM 4.7's strength lies in its consistency, ranking near the top across the board rather than specializing in just one area.

⬤ Beyond raw performance, GLM 4.7 delivers serious value: $0.60 per million input tokens, $2.20 per million output tokens, a 200,000-token context window, and a processing speed of 70 tokens per second. That combination makes it a practical choice for developers who want top-tier results without paying premium prices or compromising on speed and scale.
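
To make the pricing concrete, here is a minimal Python sketch that turns the per-token prices quoted above into a per-request estimate. The function names are hypothetical, and the sketch assumes that the 70 tokens per second figure refers to output generation and that billing is strictly linear in token count; neither detail is confirmed by the benchmark results.

```python
# Figures quoted in the article; everything else here is illustrative.
INPUT_PRICE_PER_M = 0.60    # USD per million input tokens
OUTPUT_PRICE_PER_M = 2.20   # USD per million output tokens
TOKENS_PER_SECOND = 70      # assumed to describe output generation speed


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of one request, assuming linear per-token billing."""
    total = input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M
    return total / 1_000_000


def estimate_generation_seconds(output_tokens: int) -> float:
    """Estimate how long generating the output takes at the quoted speed."""
    return output_tokens / TOKENS_PER_SECOND


if __name__ == "__main__":
    # Hypothetical request: a 50,000-token prompt with a 2,000-token reply.
    cost = estimate_cost(50_000, 2_000)
    seconds = estimate_generation_seconds(2_000)
    print(f"Estimated cost: ${cost:.4f}")
    print(f"Estimated generation time: {seconds:.1f} s")
```

Under these assumptions, a 50,000-token prompt with a 2,000-token reply costs roughly $0.034 and takes a little under half a minute to generate.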

⬤ The bigger picture? Open-source models have closed the performance gap dramatically. Just months ago, proprietary systems held a clear edge—now several open alternatives are neck-and-neck on critical benchmarks. Organizations weighing cost, transparency, and deployment flexibility will likely take notice, as open-source solutions cement their place in serious AI development.

Usman Salis
