⬤ Stanford University's latest Foundation Model Transparency Index shows a troubling trend: most major AI developers are becoming less transparent about how their systems work. The index measures how openly companies share critical details about their flagship models—everything from training data sources and risk controls to system limitations and economic impact. Each company gets scored out of 100, creating one of tech's most comprehensive snapshots of AI accountability.

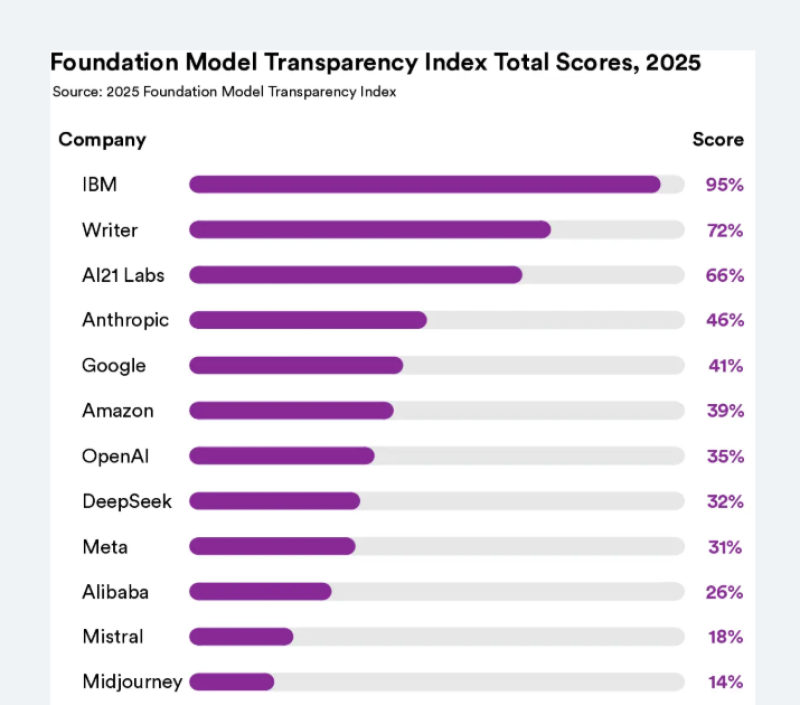

⬤ IBM dominated the 2025 rankings with a 95% score—the highest mark since the index launched. What set IBM (NYSE: IBM) apart? It's the only company that gave external researchers enough detail to actually recreate its training data process and access those datasets for independent review. Writer AI came in second with 72%, followed by AI21 Labs at 66%. The rest of the pack lagged far behind: Anthropic scored 46%, Google hit 41%, Amazon reached 39%, OpenAI managed 35%, DeepSeek got 32%, Meta landed at 31%, Alibaba scored 26%, Mistral came in at 18%, and Midjourney brought up the rear with just 14%.

⬤ Here's the catch: even with IBM's standout performance, the industry as a whole is sliding backward. Stanford's researchers found that transparency around fundamental questions—how models get trained, how companies control risks, who depends on these systems economically—has actually dropped compared to previous years. This is happening just as AI tools are exploding across businesses, consumer apps, and government operations. The index exists to give regulators, researchers, and regular people a consistent way to track whether AI companies are being straight about the risks and mechanics of their technology.

⬤ The results show just how inconsistent transparency remains across Big Tech and AI startups. IBM's commanding lead, set against middling scores from giants like Google and Amazon, suggests the industry hasn't settled on basic disclosure standards yet. As AI keeps spreading into more areas of life, these transparency ratings will likely carry more weight. They could shape future regulations, influence customer decisions, and define what "responsible AI" actually means in practice.

Sergey Diakov
